Compaction in Java Garbage Collection

轉自:http://www.deepheap.com/2008/09/ghost-in-java-virtual-machine.html

Compaction is a lesser known facet of garbage collection. In this article, I'll discuss how important it is for Java performance. Left untamed, compaction can come back to haunt you. Find out how it works, where the problems lurk and how to make them go away.

The good, the bad and the ugly sister

Working with high-volume, server-side applications, I often come across performance issues due to mistuned Java virtual machines. These types of applications, especially when acting as back-end servers for web sites, are particularly susceptible to garbage collection hiccups. This is because they can be particularly demanding of the JVM. They require both a high degree of responsiveness and high throughput; the software engineering equivalent of having your cake and eating it too. This problem is exacerbated if the garbage collector is required to manage a large working set (i.e. a large heap with many long-lived objects). Having a large working set is like asking for a cherry on top of that proverbial cake.

While much has been written about garbage collection algorithms in the realm of performance tuning, compaction is the lesser known ugly sister. It turns out that compaction plays an important role in getting high performance out of garbage collectors. With respect to those highly demanding Java applications, this article explores the good and the bad of the ugly sister compaction.

Evil dark matter

In the decades of research of garbage collection in academia, researchers made an interesting observation about memory allocation. This came to be formulated in the weak generational hypothesis. It simply states that most objects die young. In other words, the vast majority of memory is only needed for a very short amount of time. For object oriented languages, including Java, the correlation is even stronger; as much as 98% of object life cycles are short lived. Since object turnover is high, there is consequential high turnover of memory usage. The natural side-effect is fragmentation within the heap. Much like fragmentation on a file system, heap fragmentation can be viewed as holes of de-allocated memory nestled between chunks of allocated memory. It is sometimes referred to as dark matter. It wastes heap because it takes up space yet is not used by the overlaying application. Garbage collectors cannot naïvely re-allocate that space because dark matter strands can vary in size. Thus a newly allocated object must fit within the fragment. Tiny fragments may become unusable.

Fragmentation is unavoidable. It is considered normal for a high volume, 24/7 type application to waste up to 10% of total heap due fragmentation. At this level of waste, the detrimental effect on the garbage collector will not be felt. It is the cost of doing business. However, left unchecked, fragmentation can eventually spread across the entire heap and cause the JVM to run out of memory space. At worst, it can incapacitate a Java application. Garbage collection algorithms must deal with this fact and find a solution. This is generally solved in one of two ways: 1) keep track of each individual strand of dark matter and re-allocate them whenever possible or 2) copy live objects to another part of memory squeezing them adjacently to one another (the basic definition of compaction). There are pros and cons to either approach. Let's explore these.

Re-allocation of dark matter

Since compaction involves moving objects and updating referring objects, it can only be done during a major garbage collection while the entire JVM is paused. Bypassing compaction altogether therefore reduces pause times. The up-front savings can be significant when you consider that compaction can last anywhere from 10 to 100 milliseconds.

In lieu of compaction, dark matter must be tracked via free lists. Free lists are mappings of dark matter of the entire heap. The use of free lists make every object allocation much more expensive because the free list must be scanned to find a hole big enough for the object size being allocated. Additionally, some housekeeping, such as splitting or joining free blocks, may be done to meet allocation demand. This means that threads can starve waiting for memory to be found and allocated. Viewing application performance holistically, it can end up costing much more than compaction.

Another detrimental effect of dark matter re-allocation is locality of related objects. When a thread creates objects simultaneously and links them together, these related objects can end up being physically scattered across the heap in different memory pages. When comes time to address these objects, the operating system must page in all of these objects to access them. If multiple related objects are each in their own page, poor object locality can causes excessive page faults in the operating system. For a system that requires high responsiveness, this scenario is a no-go from the get-go.

Another detrimental effect of dark matter re-allocation is locality of related objects. When a thread creates objects simultaneously and links them together, these related objects can end up being physically scattered across the heap in different memory pages. When comes time to address these objects, the operating system must page in all of these objects to access them. If multiple related objects are each in their own page, poor object locality can causes excessive page faults in the operating system. For a system that requires high responsiveness, this scenario is a no-go from the get-go.

Better performance through compaction

On the other hand, there are several benefits accrued if a garbage collector performs regular compaction. First, the heap is constantly being re-organized. Densely populated object areas are kept in one area of the heap and empty areas in the other. This can smoothen out application performance. This is primarily due to the fact that memory allocation is done very efficiently since objects are allocated contiguously across the heap. As such, when threads require memory for several related objects being created simultaneously, the JVM can return sequential cells of memory. Related objects are thus close to one another. Not only is object allocation as quick and easy as incrementing the next free address pointer in the heap, but the additional benefit is reduced page faults when accessing these related objects.

Since compaction increases pause times during major collections, care must be given to how much of the heap is compacted every time. JVM vendors have varying techniques for spreading compaction processing over time. We'll explore how two popular JVMs (Oracle/BEA's Jrockit and Sun's Hotspot) implement compaction. My intent is not to compare performance but rather to expose compaction techniques.

Jrockit compaction

Jrockit designers have gone to great lengths in designing a sophisticated compaction algorithm. This may represent an implicit acknowledgment of the importance of compaction. Regardless of the chosen collection algorithm (gencon, genpar, sincon, and sinpar and derivatives thereof), compaction figures prominently. Jrockit divides the heap into what is called heap parts. Every time a major garbage collection occurs, a heap part is compacted. Moving through the heap sequentially, once per major collection, leads to the eventual compaction of the entire heap. Compaction then restarts from the beginning. It is possible to fine tune the size of each heap part as well as the amount of parts to compacted per collection. This allows compaction fine tuning control not available on Hotspot.

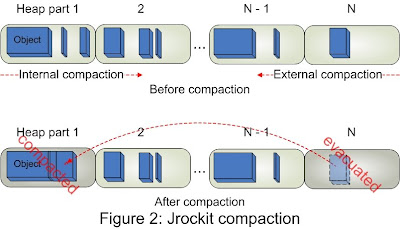

There are two types of compaction algorithms working in tandem over the heap. These are internal and external compaction. They each take turns and run at every other major collection. Internal compaction squeezes all objects to the beginning of the heap part. It is called internal because objects are compacted within the heap part. The second is named external compaction and works by evacuating all objects outside the heap part and onto the beginning of the heap. Both compactors work in a sliding window fashion - internal compaction moves from the beginning to end of the heap while external compaction moves in the opposite direction. When both meet in the middle, the entire heap has been compacted, albeit using different compactors, and the cycle begins again. Figure 2 shows the heap before and after compaction.

Internal compaction has only been introduced version R27. Prior to that version, only external compaction was performed. The thinking behind having two compaction algorithms is that it yields better overall compaction of the entire heap. Internal compaction ensures that objects are close to one another at the beginning of every heap part. This leaves many contiguous empty cells available for allocation in the remaining portion of the heap part and in every heap part. However, internal compaction can leave objects scattered throughout the heap in different heap parts and this reduces locality which increases page faults. External compaction helps alleviate this problem. By relocating all objects from the end of the heap to the beginning, it clears out sparsely populated areas. Having both types of compaction can yield a good mix of contiguous free memory and good object locality.

Hotspot compaction

Sun's Hotspot JVM compaction algorithm is simpler but not available on all garbage collectors. It cannot be fine-tuned - except by turning it off by using a non-compacting collector such as the Concurrent Mark Sweep (or CMS) collector. It keeps fragmentation under control via free lists. On the other hand, the Serial Collector, Parallel Collector and the Parallel Compacting Collector performs compaction during every major collection.

Sun's Hotspot JVM compaction algorithm is simpler but not available on all garbage collectors. It cannot be fine-tuned - except by turning it off by using a non-compacting collector such as the Concurrent Mark Sweep (or CMS) collector. It keeps fragmentation under control via free lists. On the other hand, the Serial Collector, Parallel Collector and the Parallel Compacting Collector performs compaction during every major collection.

Compaction squeezes all live objects towards the beginning part of the heap. However, over time, it can become very densely populated leaving very low levels of fragmentation. A naïve implementation could end up spending too much time compacting dense areas and have no return on investment. Instead, these collectors use what is known as a dense prefix that acts like a watermark denoting the optimal point at which compaction should start. Automatically computed, it allows the compactor to jump right to that watermark and start compacting from that point on until the end of the heap.

Footnotes and anecdotes

Having different compacting strategies results in very different memory layouts. Jrockit memory layout results in clusters of densely populated areas scattered throughout the entire heap. The end of the heap tends to be sparsely populated. Hotspot compaction results in densely populated areas at the beginning of the heap and sparsely populated areas at the end. Of course, the density of the areas depend on the application's footprint and the amount of live objects is has. As they say in those get-rich-quick infomercial, individual results may vary.

Getting the right compaction behaviour can be tricky. With Jrockit, for example, mistuned compaction can cause abnormal CPU usage spikes to come and go. Spikes can come as the external compactor visits and compacts densely populated heap parts. When the compactor traverses less dense areas, the CPU usage goes back down. This results in uneven pause times and robs the overlaying application of precious CPU time when dense areas are being traversed. Fortunately, it is possible to fine tune compaction by dividing the heap into more parts thereby making each part smaller. By doubling the amount of heap parts, it effectively makes each part half as dense because it is only half its original size. However, since the compactor will only do half the work, dark matter may increase.

Modern garbage collector design borrows extensively from years of academic research. However, JVM vendor literature remains superficial and somewhat secretive when it comes to describing garbage collection algorithms details - especially compaction. As an independent software engineer, my knowledge is based purely on published garbage collection theory and a healthy dose of real-life experience borne out of solving JVM compaction problems.

Conclusion

Compaction plays an important role in getting high performance and high responsiveness out of Java applications. By understanding it, this is one ghost that can't come back to haunt us.

沒有留言:

發佈留言